Integrate Memory into Chatbots with Mem0

Chat applications can benefit from remembering key information about a user. Having such information allows an application to craft personalized responses based on past exchanges. It also saves users time because they don’t have to repeat key information. Sending a message history to LLMs and managing conversational state with entities helps facilitate exchanges, but both can fall short. An LLM may become confused and less reliable as a conversation grows long or convoluted. Managing entities may become unwieldy as more of them are needed and the conversation becomes more dynamic and open-ended. A dedicated memory layer offers a flexible way to help a conversational system remember and use key information about its users.

Mem0 is an open-source memory layer tool for conversational experiences. It is pronounced “mem-zero”. It stores memory records in one or more databases and retrieves those memories based on a user’s query. This gives a chatbot a way to relate pieces of information about a user and helps an LLM generate personalized responses. We’ll set up a local testing environment for experimenting with Mem0.

Set up LLM

Mem0 supports a variety of LLM providers. I’ll focus on Ollama throughout this article. If you aren’t able to run models locally with Ollama, you can use OpenAI instead. I’ll note how to change the setup to use OpenAI, as well. Pick the option that works best for you.

Ollama

To get started, install Ollama. Next, you’ll need to pull a few models. It will take some time to download these models.

ollama pull llama2
ollama pull llama3
ollama pull nomic-embed-text

OpenAI

To use OpenAI, you should add a valid OpenAI API key to an .env file. Install dotenv to use the key in the script.

pip install python-dotenv

Prepare the Vector Database

The vector database will store the memories Mem0 creates. You have a variety of options for vector databases. We’ll use Qdrant for now.

Qdrant is available on Docker, so we don’t need to set it up ourselves. If you haven’t worked with Docker, you can view its installation guides to set it up. The command below will pull a Docker image of Qdrant and run it for you.

docker run --rm --name memory-test -p 6333:6333 -p 6334:6334 -v $(pwd)/qdrant_storage:/qdrant/storage:z qdrant/qdrant

You can shut down the database instance by pressing “CTRL-C” in the terminal you launched it from.
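Before moving on, it can help to confirm that the container is reachable. The sketch below is only a convenience check and is not part of Mem0. It assumes Qdrant’s REST API is listening on port 6333, as in the Docker command above, and the helper name qdrant_collections is made up for this example.

```python
import json
import urllib.request


def qdrant_collections(base_url: str = "http://localhost:6333", timeout: float = 2.0):
    """Return the list of collection names, or None if Qdrant is unreachable."""
    try:
        # GET /collections lists the collections in the Qdrant instance.
        with urllib.request.urlopen(f"{base_url}/collections", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts; ValueError covers bad JSON.
        return None
    return [c["name"] for c in payload.get("result", {}).get("collections", [])]


if __name__ == "__main__":
    names = qdrant_collections()
    print("Qdrant is not reachable" if names is None else f"Collections: {names}")
```

On a fresh instance the list should be empty, since Mem0 has not created the memories collection yet.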

Configuring Mem0

Mem0 works by saving key information into vector or graph databases after processing a user’s message with an LLM.

For the vector database, Mem0 uses an LLM to extract key information to create or update memory instances. It will not save the user query itself, but rather will create one or more memory instances based on the query. Llama 2 and Llama 3 will be the generative models that handle this processing. Once a memory has been extracted, Mem0 will embed it using an embedding model (e.g., nomic-embed-text). Finally, Mem0 will save the memory instance(s) into the database (i.e., Qdrant).
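To make that flow concrete, here is a toy sketch of the three steps: extract, embed, store. It is not Mem0’s implementation. The extraction rule, the hash-based embedding, and every function name in it are stand-ins invented for illustration. Mem0 uses an LLM for extraction, a real embedding model, and Qdrant for storage.

```python
import hashlib
import math


def extract_facts(message: str) -> list[str]:
    """Stand-in for the LLM extraction step (hypothetical, rule-based)."""
    prefix = "I have visited "
    if message.startswith(prefix):
        return [f"Has visited {message[len(prefix):]}"]
    return []


def toy_embed(text: str, dims: int = 8) -> list[float]:
    """Stand-in for an embedding model: hashes words into a unit vector."""
    vec = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vec[digest[0] % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def add_memory(store: list[dict], message: str, user_id: str) -> list[dict]:
    """Extract facts, embed each one, and append the records to the store."""
    added = []
    for fact in extract_facts(message):
        record = {"user_id": user_id, "memory": fact, "vector": toy_embed(fact)}
        store.append(record)
        added.append(record)
    return added


store: list[dict] = []  # stand-in for the vector database
add_memory(store, "I have visited Germany", "test_user")
add_memory(store, "What is the weather like?", "test_user")  # no fact, nothing stored
print([r["memory"] for r in store])  # ['Has visited Germany']
```

Note that the stored text is the extracted fact, not the original message, which mirrors the behavior described above.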

Let’s start setting up a script. Create a Python file called memory_test.py. You’ll need to add a configuration object to that file. The config will differ depending on whether you are using Ollama or OpenAI.

Ollama

The config specifies the vector store, LLM, and embedding model. The “version” key is the Mem0 API version, not the package version.

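from mem0 import Memory  # provided by the mem0ai package (pip install mem0ai)

config = {
    # Where Mem0 stores the embedded memories
    "vector_store": {
        "provider": "qdrant",
        "config": {
            "collection_name": "memories",
            "host": "localhost",
            "port": 6333,
            "embedding_model_dims": 768,  # nomic-embed-text produces 768-dimensional vectors
        },
    },
    # The generative model that extracts and updates memories
    "llm": {
        "provider": "ollama",
        "config": {
            "model": "llama2",
            "temperature": 0,
            "max_tokens": 8000,
            "ollama_base_url": "http://localhost:11434",
        },
    },
    # The model that embeds each memory before it is stored
    "embedder": {
        "provider": "ollama",
        "config": {
            "model": "nomic-embed-text",
            "ollama_base_url": "http://localhost:11434",
        },
    },
    "version": "v1.1",  # Mem0 API version, not the package version
}

m = Memory.from_config(config)

OpenAI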

For an OpenAI setup, the config defines a vector database and LLM settings. It also includes the Mem0 API version, not the package version. You’ll need to make sure your script can read the OPENAI_API_KEY from your .env file.

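from dotenv import load_dotenv
from mem0 import Memory  # provided by the mem0ai package (pip install mem0ai)

# Load OPENAI_API_KEY from the .env file into the environment
load_dotenv()

config = {
    "vector_store": {
        "provider": "qdrant",
        "config": {
            "collection_name": "memories",
            "host": "localhost",
            "port": 6333,
            "embedding_model_dims": 1536,  # size of OpenAI's text-embedding-3-small vectors
        },
    },
    "llm": {
        "provider": "openai",
        "config": {
            "model": "gpt-4o",
            "temperature": 0.2,
            "max_tokens": 1500,
        },
    },
    "version": "v1.1",  # Mem0 API version, not the package version
}

m = Memory.from_config(config)

Adding a Memory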

To understand how Mem0 works, we’ll use some simple simulated messages about locations a user visited. Add the following to your script.

statements = [
    "I have visited Germany",
    "I have visited Paris",
    "I have visited Tokyo",
    "I have visited Seoul",
    "I have visited Toronto",
]

Mem0 gives you access to methods to create, update, read, and delete memories. You can build complex logic around these methods to ensure the memory works in the way most suitable for your application. Let’s just attempt to add each user message to the memory store. The Mem0 “add” method takes in a message and a user.

You can pass in the message itself, but Mem0 will convert it to a list of dictionaries as below.

user = "test_user"

for message in user_conversation:

print(f"Adding: {message}")

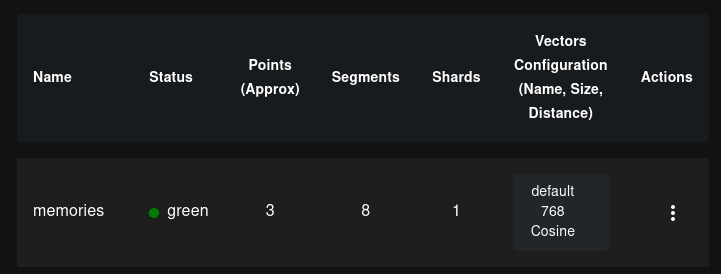

m.add([{"role": "user", "content": message}], user_id=user)Try running the script. The script ran through each message without any error. To verify it worked, check the Qdrant database’s dashboard at http://localhost:6333/dashboard#/collections.

Something unusual has happened. Even though the script processed all the messages without error, only 3 memories made it to the database. No memories about Tokyo or Toronto are in the memories collection.

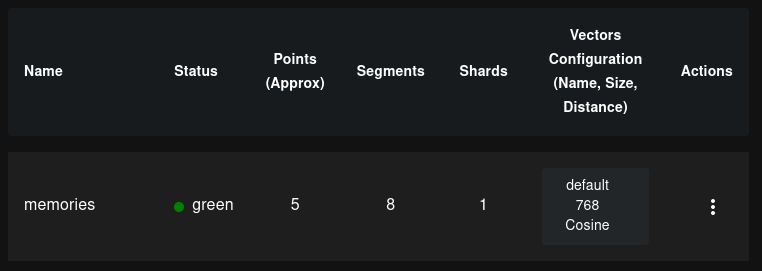
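If you would rather check from the script than from the dashboard, Mem0’s get_all method returns the stored memories for a user. The helper below is a small sketch for pulling out just the memory text. The function name and the sample data are made up for illustration, and the handling of a bare list is an assumption about older output formats.

```python
def memory_texts(result) -> list[str]:
    """Pull the memory strings out of a Mem0 get_all() or search() result."""
    # With "version": "v1.1" the records sit under a "results" key.
    # Assumption: older output formats return the list of records directly.
    records = result["results"] if isinstance(result, dict) else result
    return [record["memory"] for record in records]


# In memory_test.py this would be called as:
#   print(memory_texts(m.get_all(user_id=user)))
# Hypothetical sample data shaped like the v1.1 output:
sample = {
    "results": [
        {"id": "1", "memory": "Has visited Germany"},
        {"id": "2", "memory": "Has visited Paris"},
    ]
}
print(memory_texts(sample))  # ['Has visited Germany', 'Has visited Paris']
```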

We’ll try this again with a different LLM setting. Delete the memories collection in your Qdrant instance. In your script file, change the config LLM model from “llama2” to “llama3:8b”. Run the script again.
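You can delete the collection from the Qdrant dashboard. If you prefer to do it from Python, the sketch below sends a DELETE request to Qdrant’s REST API. It assumes the instance from the Docker command above and the collection name “memories” from the config. The function names are made up for this example.

```python
import urllib.request


def delete_collection_request(
    collection: str, base_url: str = "http://localhost:6333"
) -> urllib.request.Request:
    """Build the DELETE /collections/{name} request without sending it."""
    return urllib.request.Request(f"{base_url}/collections/{collection}", method="DELETE")


def delete_collection(
    collection: str, base_url: str = "http://localhost:6333", timeout: float = 5.0
) -> bool:
    """Send the request; return True on HTTP 200, False if anything goes wrong."""
    request = delete_collection_request(collection, base_url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers refused connections, timeouts, and HTTP error statuses.
        return False


if __name__ == "__main__":
    print("Deleted" if delete_collection("memories") else "Nothing deleted")
```

Mem0 also has its own cleanup methods, such as delete_all for a given user, which may be a better fit once you are managing memories per user.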

This time, Mem0 saved all the statements to memory. Using a more powerful model helped Mem0 work with the memory data better. Mem0 uses an LLM not only to create a concise memory statement but also to determine what to do with that memory statement. You can check out the prompt for updating a memory in the Mem0 repo.

It is important to test out different models to see how well each works with the content your chatbot will handle. This is especially important if you allow people to select their own models or have a fallback model.
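One way to make such comparisons less tedious is to generate a config per model from a single base config. This is a sketch under the assumption that only the LLM model name changes between runs. The helper name and the trimmed-down base config are made up for illustration.

```python
import copy


def config_for_model(base_config: dict, model: str) -> dict:
    """Return a copy of the config with the LLM model swapped out."""
    new_config = copy.deepcopy(base_config)  # leave the base config untouched
    new_config["llm"]["config"]["model"] = model
    return new_config


# Trimmed-down base config; in memory_test.py you would pass the full config.
base = {"llm": {"provider": "ollama", "config": {"model": "llama2", "temperature": 0}}}

for model_name in ["llama2", "llama3:8b"]:
    cfg = config_for_model(base, model_name)
    print(cfg["llm"]["config"]["model"])
    # In memory_test.py: m = Memory.from_config(cfg), then add the statements
    # to a fresh collection and compare what ends up in the database.
```

Using a separate collection name per model would keep the results of each run apart, so you can compare them side by side.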

Searching Memories

So far, we’ve looked at adding memories for one user. However, your chatbot will need to handle multiple users interacting with it. The bot will need to save memories for a specific user and pull those memories back out when necessary. We’ll look at that now by handling 2 different conversations.

Delete the memories collection in Qdrant. Also, replace the travel location messages from earlier with the following two conversations. These conversations just represent the user messages. The assistant’s responses are not provided.

conversations = {
    "user_1_conversation": [
        "I'm going to visit Tokyo. What should I do?",
        "I love to eat ramen. What kinds of ramen are in Tokyo?",
        "Sometimes, I eat shoyu ramen, but my favorite is tonkotsu ramen.",
        "What are some popular ramen places near Tokyo that are known for their unique flavor?",
    ],
    "user_2_conversation": [
        "I want to grow herbs indoors. What kind of tools do I need for that?",
        "That doesn't seem too bad. My apartment doesn't have much space. How much space would a typical setup for a beginner take up?",
        "I think I want to grow oregano. Is that possible?",
        "What about chili pepper plants?",
        "I'm not good at gardening, so I'll start off with oregano then.",
    ],
}

Adjust the way the script sends user messages to Mem0 by making sure it passes along both conversations and assigns the right user to each conversation.

for user in ["user_1", "user_2"]:
    user_conversation = f"{user}_conversation"
    for message in conversations[user_conversation]:
        print(f"Adding: {message}")
        m.add([{"role": "user", "content": message}], user_id=user)

Finally, you can search the database for the most relevant results for each user.

answer = m.search(query="Where should I travel next?", user_id="user_1")
print(answer)

answer = m.search(query="Where should I travel next?", user_id="user_2")
print(answer)

The results from this search will return the most relevant memories associated with a user. Mem0 returns a score for each result, which may indicate how similar it is to the original user query. When running this, I got the following results for each user. Note that your output may differ. The score for each result is pretty low. That is expected because User 1 focused on ramen, and User 2 focused on gardening.

# User 1

{'results': [{'id': '4c315d44-cb4d-4366-a539-ee5c6040118e', 'memory': 'Loves to eat ramen and Looking for types of ramen in Tokyo', 'hash': '72c6f7c059a50f7b5dcd9bd50bbf553a', 'metadata': None, 'score': 0.45501924, 'created_at': '2024-12-31T06:20:12.190528-08:00', 'updated_at': '2024-12-31T06:25:50.184943-08:00', 'user_id': 'user_1'}]}

# User 2

{'results': [{'id': '1baaaa78-a30c-4a1a-bd34-ebb9870748b9', 'memory': 'Will start with oregano', 'hash': 'dd995f100127dea197fe76a59a154c30', 'metadata': None, 'score': 0.45728984, 'created_at': '2024-12-31T06:42:56.879122-08:00', 'updated_at': None, 'user_id': 'user_2'}, {'id': 'e037826e-1173-411d-b0a4-47f47dc43183', 'memory': 'Wants to grow oregano', 'hash': '023b96e68f8eb7fb02c2911ca3dfa4aa', 'metadata': None, 'score': 0.3894324, 'created_at': '2024-12-31T06:37:22.555176-08:00', 'updated_at': None, 'user_id': 'user_2'}, {'id': 'f0e0a0e0-ebef-4416-a8d4-f60241c7e47b', 'memory': 'Not good at gardening', 'hash': '15736eaea5dbf3739be1e67bf2a17100', 'metadata': None, 'score': 0.30708656, 'created_at': '2024-12-31T06:42:56.858504-08:00', 'updated_at': None, 'user_id': 'user_2'}]}

User 1 and User 2 didn’t talk about traveling very much. While User 1 did mention their upcoming trip, their main focus was on ramen. The first set of messages you worked with, the ones about places visited, is probably more closely related to this query than the conversations of User 1 and User 2. Try out those sentences about places traveled again. What are the scores for those?
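Because every result carries a score, you can decide how strong a match needs to be before a memory is passed along to the LLM. The sketch below filters and sorts results by score. It assumes a higher score means a closer match, and the 0.4 cutoff is an arbitrary value picked for illustration, not a Mem0 recommendation. The sample data reuses the memories and scores from the User 2 output above.

```python
def relevant_memories(search_result: dict, min_score: float = 0.4) -> list[str]:
    """Keep memories scoring at least min_score, best match first."""
    hits = [r for r in search_result["results"] if r["score"] >= min_score]
    hits.sort(key=lambda r: r["score"], reverse=True)
    return [r["memory"] for r in hits]


# Memories and scores copied from the User 2 results (other fields omitted)
user_2_result = {
    "results": [
        {"memory": "Will start with oregano", "score": 0.45728984},
        {"memory": "Wants to grow oregano", "score": 0.3894324},
        {"memory": "Not good at gardening", "score": 0.30708656},
    ]
}
print(relevant_memories(user_2_result))  # ['Will start with oregano']
```

A cutoff that works for one embedding model or topic may not suit another, so it is worth checking against real conversations before settling on a value.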

Integrate Memories into LLM Calls

Now that Mem0 is keeping track of memories, you can use the relevant memories to help the LLM personalize its responses. User 2 talked about indoor gardening. Suppose User 2 wants to re-engage with the chatbot and asks, “Aside from the plants we talked about, what other plants could I grow?”

Here is what the LLM output without memory. The chatbot doesn’t recall the previous conversation. It says as much due to its prompt. However, the response itself shows no awareness of “indoor gardening”, User 2’s initial focus on herbs, or User 2’s skill level.

I'm happy to help! Since I don't recall our previous conversation about plants, let's start fresh. There are countless plant species you can grow, depending on your climate, soil type, and personal preferences. Here are some popular options:

1. Fruits:

* Tomatoes

* Peppers (bell or hot)

* Cucumbers

* Strawberries

* Blueberries

* Raspberries

* Blackberries

2. Vegetables:

* Leafy greens (lettuce, kale, spinach)

* Broccoli

* Cauliflower

* Carrots

* Radishes

* Green beans

* Corn

3. Herbs:

* Basil

* Cilantro

* Parsley

* Dill

* Thyme

* Rosemary

* Sage

4. Flowers:

* Marigolds

* Zinnias

* Sunflowers

* Dahlias

* Roses (many varieties)

* Lavender

5. Grains:

* Wheat (for bread or animal feed)

* Oats

* Barley

6. Nuts and seeds:

* Almonds

* Walnuts

* Pecans

* Sunflower seeds

* Pumpkin seeds

7. Medicinal plants:

* Echinacea

* Goldenseal

* St. John's Wort

* Ginseng

These are just a few examples of the many plant species you can grow. Remember to research each plant's specific growing requirements, such as sunlight, soil type, and watering needs, to ensure success.

What type of plants are you interested in growing? I'd be happy to provide more information and recommendations!

To add the memory Mem0 retrieved, you need to get the memories into a useful format and inject them into your prompt.

import requests

# The other code

# Join the retrieved memories into one string. The loop variable is named
# "item" so it does not shadow the Memory instance m.
previous_memories = "\n ".join([item["memory"] for item in answer["results"]])

# Only add the memory preamble when there is something to share
previous_memories_prompt = ""
if previous_memories:
    previous_memories_prompt = (
        f"Here are some facts about the user: {previous_memories}"
    )

data = {
    "model": "llama3:8b",
    "messages": [
        {
            "role": "system",
            # Use the full preamble, not the bare list of memories
            "content": f"You are a helpful assistant. {previous_memories_prompt}",
        },
        {
            "role": "user",
            "content": "Aside from the plants we talked about, what other plants could I grow?",
        },
    ],
    "stream": False,
}

response = requests.post("http://localhost:11434/api/chat", json=data)
# With "stream": False, Ollama returns one JSON object with the reply under "message"
print(response.json()["message"]["content"])

After using memory, the LLM gave a better response. It remembered that User 2 was interested in growing herbs indoors. It specifically remembered that User 2 was interested in oregano and mentioned other similar herbs they could grow. It did not mention large or difficult plants, even when it offered suggestions “beyond herbs.”

Since you're interested in growing oregano and want to garden indoors, here are some other low-maintenance, indoor-friendly herbs that might suit your taste:

1. Basil: Another popular herb, basil is relatively easy to grow indoors. It loves bright light, so place it near a sunny window.

2. Thyme: A hardy, fragrant herb that thrives in well-draining soil and indirect sunlight. You can even prune it to maintain a bushy shape.

3. Mint: Mint is notorious for spreading quickly, but you can contain its growth with regular pruning. Most mint varieties love bright light and moist soil.

4. Parsley: A slow-growing herb that prefers partial shade and consistent moisture. Use the curly-leaf variety for a more visually appealing display.

5. Chives: Another low-maintenance option, chives are relatively pest-free and don't require much maintenance. They prefer well-draining soil and indirect sunlight.

If you're willing to move beyond herbs, you could also consider:

1. Microgreens: These young, nutrient-dense greens are easy to grow indoors and come in a variety of flavors (e.g., pea shoots, radish greens).

2. Sprouts: Alfalfa, broccoli, or mung beans can be sprouted indoors using a jar or tray. They're nutritious, easy to care for, and mature quickly.

3. Succulents: If you enjoy the structure and visual appeal of plants but don't want to worry about herb care, succulents are an excellent choice. They come in many shapes, sizes, and colors, and require minimal watering.

Which one of these options resonates with you?

Handling memory is a critical component of conversational experiences. An effective memory layer can help a chatbot use context about the user to improve its responses. Mem0 is a useful tool for integrating a memory layer into conversational AI projects.