Experimenting with generative workflows using Flowise, Ollama, and LangFuse

Generative workflows are getting more and more complicated as new tools and techniques come out. If we just look at retrieval-augmented generation (RAG), we can see that there are different types (graph, agentic, self, etc.) and techniques (hybrid search, HyDE, re-ranking, etc.) that can be combined to work with documents ranging from text documents to multi-modal documents. Experimenting with the wide variety of tools, techniques, settings, and architectures can become quite complicated. When prototyping, experimenting, and learning, it can be ideal to reduce that complexity.

Flowise is an open-source, low-code tool for building and testing LLM workflows. It offers a simple drag-and-drop interface where you can try out tools and integrations before implementing them in an application. This makes it ideal for testing prompts and settings, as well as getting an overall, visual understanding of what a workflow involves. Flowise primarily offers LangChain and LlamaIndex tools. You don’t need to write code, but you do need some understanding of these frameworks’ components to build with them in the platform, so it is worth reading their documentation. Flowise has a paid cloud subscription service, but you can also run it locally by building it from its repository or using its Docker image on Docker Hub.

In this article, we’ll build out a simple chat flow that will track our conversations in Flowise. To support this application, we’ll use Ollama for local models. You can, of course, swap out to OpenAI, Anthropic, or another model provider should you want to. For analytics, we’ll connect to LangFuse.

Setting up Flowise

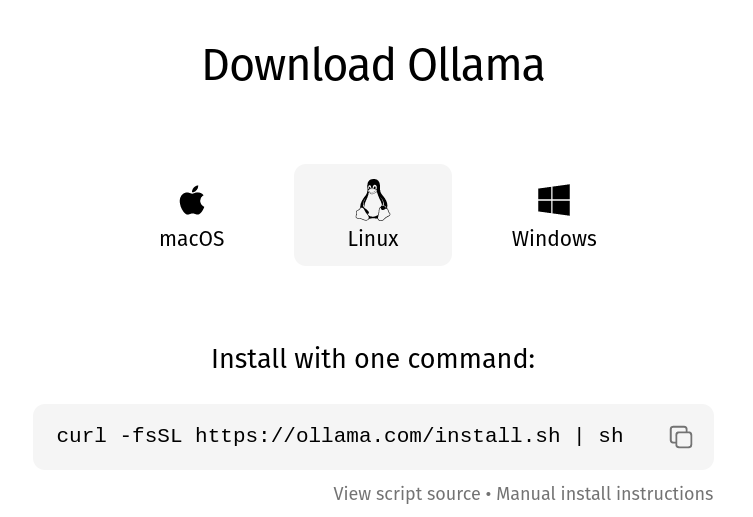

To get started, you will need to prepare your LLM. To set up Ollama on your computer, you can follow the instructions on the Ollama website.

With Ollama set up, you need to pull models to your computer. You can pick models that are compatible with your system’s resources. If you don’t have much VRAM, you may want to use tinydolphin. If you have a comfortable amount of VRAM, you may be able to run gemma:2b. I’ll use gemma:2b throughout this article.

ollama run gemma:2b

Now let’s set up Flowise. In their repository, Flowise recommends building from the source code locally. That’s advantageous if you’d like to make adjustments to the application or how it runs. To start, you will need to clone the repository to your computer.

git clone https://github.com/FlowiseAI/Flowise

Then, from inside the cloned directory, build a Docker image from the repo.

docker build --no-cache -t flowise .

Next, run the image as a Docker container. The command to start the Docker container will differ depending on how you are connecting to the LLM. Flowise will look for Ollama within the Docker container by default, so we need to give it access to the local Ollama instance instead.

# Works with external APIs, like OpenAI or Anthropic

docker run -d --name flowise -p 3000:3000 flowise

# Works with local Ollama (+ external APIs)

docker run -d --name flowise --network host flowise

Now, you can visit Flowise in your browser at http://localhost:3000.
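The container can take a little while to start, so it can help to confirm that the services answer before opening the browser. Here is a minimal sketch, assuming `curl` is installed and that Ollama is listening on its default port, 11434. `wait_for_http` is a hypothetical helper name used for illustration; it is not part of Flowise, Docker, or Ollama.

```shell
# wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
# Polls URL and returns 0 as soon as it answers with a non-error HTTP status.
# Returns 1 if every attempt fails (service down, wrong port, curl missing).
wait_for_http() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$url" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example, `wait_for_http http://localhost:3000 && echo "Flowise is up"` waits for Flowise, and `wait_for_http http://localhost:11434/api/tags 1` checks once whether Ollama is reachable from the host.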

Building a ChatFlow

With Ollama and Flowise set up, we can build out a chat workflow. Click Add New to create a new project. You should see an empty canvas.

In the upper-left corner, click the + icon to open up the list of available tools. The tabs in that menu have tools from LangChain and LlamaIndex. The Utilities tab generally lets you write your own code to facilitate logic between steps.

In the menu, go to LangChain > Chains > Conversation Chain. Drag that onto the canvas. This node will facilitate back-and-forth exchanges with the LLM.

The Conversation Chain node allows you to configure how the exchanges work. One way to do that is to adjust the system prompt, which is in the Additional Parameters section. The other is to attach nodes (tools) to it. The Conversation Chain node requires a chat model node and a memory node.

For the chat model, we will be using Ollama. Go to + icon > Chat Models > ChatOllama. Drag that node to the canvas.

The ChatOllama node offers a variety of settings as well. The only one we need for our simple example is the model name. Enter gemma:2b.
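The model name has to match a model that Ollama already has locally. As a quick sanity check, here is a small sketch that reads the output of `ollama list` and looks for an exact name in the first column. `has_model` is a hypothetical helper, and it assumes the usual table layout of `ollama list` (a header row, then one model per line with the name first).

```shell
# has_model NAME
# Reads `ollama list` output on stdin and succeeds if NAME appears in the
# first column. Names include the tag, so a model pulled without an explicit
# tag is listed as NAME:latest.
has_model() {
  awk -v want="$1" '
    NR > 1 && $1 == want { found = 1 }   # skip the header row
    END { exit(found ? 0 : 1) }
  '
}
```

A typical use would be `ollama list | has_model gemma:2b || ollama pull gemma:2b`, which downloads the model only if it is missing.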

For memory, we’ll just use a basic memory node. Go to + icon > Memory > Buffer Memory. Drag that to the canvas, too. We don’t need to configure that beyond the defaults.
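To get a feel for what a buffer-style memory does, here is a conceptual sketch: every turn is kept, and the whole history is sent along with each new message. This is not how Flowise implements Buffer Memory internally; the helper names (`json_escape`, `remember`, `chat_payload`) are made up for illustration, and the escaping only handles single-line text. The request body follows the shape of Ollama's `/api/chat` endpoint.

```shell
# Escape backslashes and double quotes so single-line text fits in a JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

HISTORY=""   # the "buffer": comma-separated JSON message objects

# remember ROLE CONTENT: append one turn to the buffer.
remember() {
  turn=$(printf '{"role":"%s","content":"%s"}' "$1" "$(json_escape "$2")")
  if [ -z "$HISTORY" ]; then HISTORY=$turn; else HISTORY="$HISTORY,$turn"; fi
}

# chat_payload MODEL: build a request body that contains every remembered turn.
chat_payload() {
  printf '{"model":"%s","messages":[%s],"stream":false}' "$1" "$HISTORY"
}

remember user "My name is Sam."
remember assistant "Nice to meet you, Sam."
remember user "What is my name?"
chat_payload gemma:2b   # prints the JSON body with all three turns
```

With Ollama running, `chat_payload gemma:2b | curl -s http://localhost:11434/api/chat -d @-` would send that history to the model. Without the earlier turns in the payload, the model would have no way to answer the last question.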

Now, there are several disconnected nodes on our canvas, so we need to connect them. Connect the ChatOllama node to the Conversation Chain’s chat model parameter. Similarly, connect the Buffer Memory to the Conversation Chain’s memory parameter.

That’s all we need to do to set up our simple chat app. Let’s save our work, so that we can chat with it. Click the save icon.

Enter a name for your app. Then, click Save.

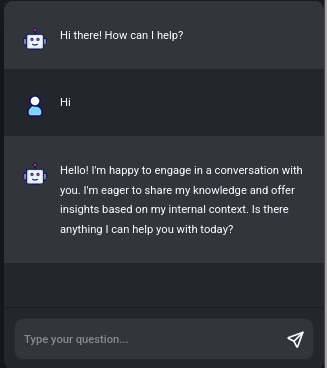

Now, you can click the message icon to open up the chat screen and send your first message!
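A saved chatflow can also be reached over HTTP, which is handy for scripted prompt tests. The sketch below assumes Flowise's prediction endpoint (`/api/v1/prediction/<chatflow-id>`) and a local instance on port 3000. The chatflow ID is a placeholder you would copy from your own flow, `prediction_payload` and `ask_flowise` are hypothetical helper names, and passing `sessionId` through `overrideConfig` may need to be allowed in the chatflow's settings depending on your Flowise version.

```shell
FLOWISE_URL=${FLOWISE_URL:-http://localhost:3000}

# Escape backslashes and double quotes so single-line text fits in a JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# prediction_payload QUESTION [SESSION_ID]
# Builds the JSON body; the session ID (an assumption, see above) keeps
# several requests in the same conversation.
prediction_payload() {
  if [ -n "${2:-}" ]; then
    printf '{"question":"%s","overrideConfig":{"sessionId":"%s"}}' \
      "$(json_escape "$1")" "$(json_escape "$2")"
  else
    printf '{"question":"%s"}' "$(json_escape "$1")"
  fi
}

# ask_flowise CHATFLOW_ID QUESTION [SESSION_ID]: POST the question to the flow.
ask_flowise() {
  prediction_payload "$2" "${3:-}" |
    curl -sS -X POST "$FLOWISE_URL/api/v1/prediction/$1" \
      -H 'Content-Type: application/json' -d @-
}
```

Usage would look like `ask_flowise <your-chatflow-id> "Hello there" prompt-test-1`, with the placeholder replaced by the ID of the flow you just saved.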

Let’s add a bit more complexity. If our goal is to test out prompts, we’d want to preserve our chat records automatically so that we can compare them as we try out new prompts. To do that, we can use LangFuse, an open-source LLM engineering platform that can help us with analytics.

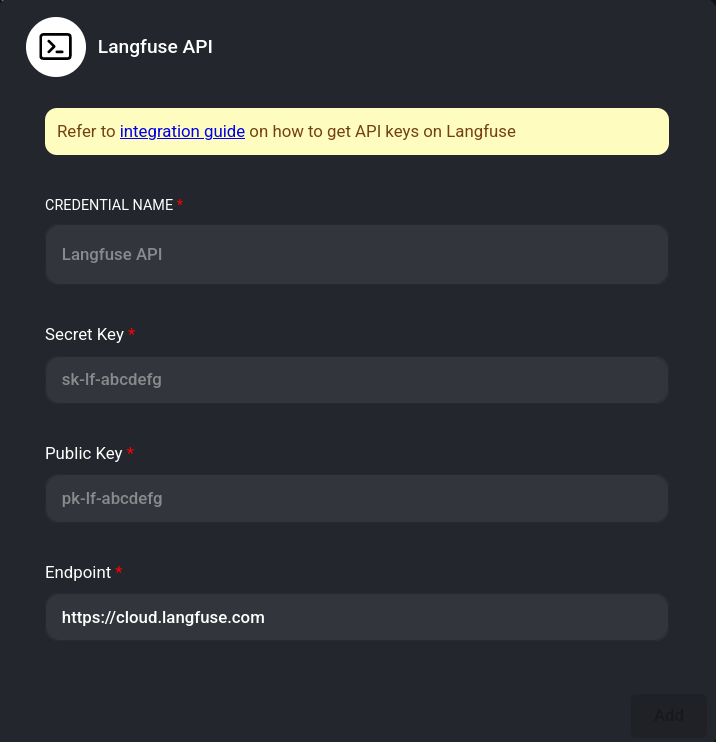

Flowise has an integration available in the settings options. To access the LangFuse integration, click the settings icon > Configuration > Analyse Chatflow > Langfuse. We’ll need to create a credential for LangFuse. To do so, click the down arrow and then Create New.

From LangFuse, we need to get a secret key, public key, and endpoint. You can get each of these by creating a LangFuse account.

Once you’ve set up LangFuse and gotten your credentials, add them to the form. Click Save. Your credential will be available, so select it from the Connect Credential field. Then, enter a release version to help you keep track of your prompt experiments. Be sure to activate the integration.

Send another message in the chat.

It may take a few moments to appear, but LangFuse will record the interaction. You can click through the different information, such as the trace, span, and generation entries, to understand what was sent to facilitate the exchange. See if you can find the prompt used, the message history with our first message, the model, and the latency in LangFuse’s records.
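If you would rather compare runs outside the web interface, the recorded traces can also be fetched over HTTP. This is a rough sketch, assuming LangFuse's public REST API at `/api/public/traces` with basic authentication (public key as the username, secret key as the password). The environment variable names and the helpers `traces_url` and `fetch_traces` are assumptions for illustration; use the same endpoint and keys you entered in the Flowise credential.

```shell
# traces_url HOST [LIMIT]
# Builds the URL for listing the most recent traces; a trailing slash on
# HOST is dropped so the path is not doubled up.
traces_url() {
  printf '%s/api/public/traces?limit=%s' "${1%/}" "${2:-10}"
}

# fetch_traces [LIMIT]
# Needs LANGFUSE_HOST, LANGFUSE_PUBLIC_KEY, and LANGFUSE_SECRET_KEY to be set.
fetch_traces() {
  curl -sS -u "$LANGFUSE_PUBLIC_KEY:$LANGFUSE_SECRET_KEY" \
    "$(traces_url "$LANGFUSE_HOST" "${1:-10}")"
}
```

After exporting the three variables, `fetch_traces 5` would return the latest five traces as JSON, which you could save next to each prompt variant you try.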

This was a good exercise to get started with Flowise. We don’t really need Flowise to handle this simple case, though. If we just want to chat with an LLM, we could use LibreChat. Also, LangChain and LlamaIndex both have many examples in their documentation for building out simple and complicated workflows.

Flowise offers some useful templates to get started with more complicated use cases. To see them, go back to your local home page in Flowise (http://localhost:3000/) and click Marketplaces.

The marketplace has many templates that you can use to experiment with different settings. One you might try is the Flowise Docs QnA. It offers a simple RAG workflow that reads from the Flowise documentation repository.

This template uses OpenAI and reads from a GitHub repository.

How might you use Ollama instead of OpenAI? Hint: you may want to use nomic-embed-text for the embedding model.

How would you work with a file you uploaded from your computer?

What would the output to LangFuse look like with this setup?

Experimentation is important when exploring the different options available in generative workflows. From testing out settings to exploring different tools, you need to understand what makes a workflow strongest to get the best results with the best performance. The abstractions of a no-code or low-code platform can be confusing at times, and the canvas is not immune to becoming a mess. However, you often can see the entire workflow, which helps you understand how it works and what might benefit from revision. Working with this visual editor can help you prototype quickly to see what works best for your use case.