Introduction to Docker: part 1

Using Docker to work with conversational AI applications

While LLMs have gotten a lot of attention, conversational AI applications can make use of many components. These might include common tools like databases, APIs, or content management systems. However, your application might require more specialized services, like queues, search engines, or voice integrations. It’s worth playing around with a variety of tools to understand what they are, how they work, and when they might be useful.

However, it can be difficult to get started with a new tool or system. Each has its own installation steps and dependencies. As your application grows in complexity, the combinations of components (and their various versions) may require adjustments that aren’t well-documented. Also, there may be differences in using them on Linux and Windows. All of this can make it hard for you to experiment, learn, and develop in a productive way. Further, your team might have more difficulty collaborating. Docker can help conversational AI developers share and use applications.

Docker as a solution

Docker is a tool that helps developers experiment with, run, and deploy individual components and even full applications in a reliable, consistent way. A developer writes a list of build instructions in a Dockerfile. Docker then uses that Dockerfile to create a package that contains everything the application needs to run. This package, called a Docker image, contains things like the application code, dependencies, runtime libraries, and configuration files. It can even hold environment variables, although secrets are generally better supplied when the container starts than baked into the image.
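To make the idea of build instructions concrete, here is a minimal sketch of a Dockerfile, written out from the shell. It assumes a hypothetical Python application with an app.py and a requirements.txt; the directory name docker-sketch and the image name my-chatbot are placeholders, not anything from a real project.

```shell
# Create a scratch directory so the sketch does not touch an existing project
mkdir -p docker-sketch

# Write a minimal Dockerfile (assumes a hypothetical Python app: app.py + requirements.txt)
cat > docker-sketch/Dockerfile <<'EOF'
# Base image that provides the runtime
FROM python:3.12-slim
WORKDIR /app
# Install dependencies first so this layer is cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code into the image
COPY . .
# Command the container runs when it starts
CMD ["python", "app.py"]
EOF

# With app.py and requirements.txt placed in docker-sketch/, you could then run:
#   docker build -t my-chatbot docker-sketch
#   docker run --rm my-chatbot
```

Each instruction in the file adds a step to the build, which is why two people building from the same Dockerfile should end up with very similar images.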

When you run an image, Docker creates a container that can run an application locally or in the cloud. You can run Docker containers for applications or tools you want to try out on your local computer. The container acts as a pre-packaged version of an application that you can start working with immediately, with little to no setup required.

However, you can also share your own applications with others. You can share images of applications with colleagues so that everyone’s using a consistent environment. Further, you can deploy your Dockerized applications to servers or cloud services to make your conversational AI project available to users. Because the Docker image contains all the necessary runtime instructions, the Docker container it creates can generally work on any operating system and across different environments that have Docker.

To get started with Docker, you can view its installation guides.

Example use case

Let’s look at how we might use Docker. A database is important for a conversational AI project because it allows an application to save information. This might include interaction data, chatbot scripts/messages, and files (like images). One type of data we might want to save to a database is embeddings.

Converting documents to embeddings allows you to do a similarity search between a user’s message and the stored embeddings to find an answer. This is a core concept of retrieval-augmented generation (RAG), which can help ground LLMs in “truth”. Generating embeddings can be time-consuming, and it isn’t something you’d want to repeat every time you use a document. Saving the results to a database is more efficient. There are several databases we might want to explore for this. PostgreSQL is a popular open-source database that has proven reliable and flexible, and its pgvector extension makes storing embeddings easy.

Unfortunately, it can be hard to set up this database and extension locally. First off, installation steps differ between Windows and Linux (see the installation guides for postgres on Windows and Linux, and for pgvector). It might take around 23 commands to set up both postgres and pgvector on Linux. While these steps seem pretty clear, issues may arise that you will need to figure out. pgvector keeps a nice list of these issues and how to handle them. Other applications may not offer such guidance, or even document their installation steps well.

On the other hand, we can set up the database with Docker in 2 lines.

docker pull pgvector/pgvector

docker run pgvector/pgvector

Amazing! Now, you have a postgres database and the pgvector extension. What if you don’t want the most current version of the postgres database? Maybe you want to try different versions. In the case of pgvector, doing so is as simple as adding a version tag.

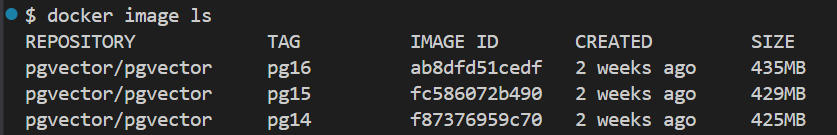

docker pull pgvector/pgvector:pg16

docker pull pgvector/pgvector:pg15

docker pull pgvector/pgvector:pg14

To run the image, you need to supply a password. You can use any password of your choosing.

docker run -e POSTGRES_PASSWORD=password pgvector/pgvector:pg16

With that up, you could start work on your application. You could test out how postgres works, set up some databases, and integrate it with your application. And you’ve gotten to that point quickly because the application was already set up via Docker.
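As a rough sketch of what that first experiment might look like, the script below starts the container in the background, waits for the server, and runs a tiny similarity search. The container name pgvector-demo, the file demo.sql, the documents table, and the three-dimensional vectors are all made up for illustration, and the script assumes host port 5432 is free.

```shell
# SQL to run once the database is up (table and column names are hypothetical)
cat > demo.sql <<'EOF'
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS documents (
  id bigserial PRIMARY KEY,
  content text,
  embedding vector(3)  -- toy size; real embeddings have far more dimensions
);
INSERT INTO documents (content, embedding) VALUES
  ('How do I reset my password?', '[1,0,0]'),
  ('What are your opening hours?', '[0,1,0]');
-- <=> is pgvector's cosine distance operator; smaller means more similar
SELECT content FROM documents ORDER BY embedding <=> '[0.9,0.1,0]' LIMIT 1;
EOF

run_demo() {
  # Start the container detached, with a name and a published port
  docker run -d --name pgvector-demo \
    -e POSTGRES_PASSWORD=password \
    -p 5432:5432 \
    pgvector/pgvector:pg16 || return 1

  # Wait (up to about 30 seconds) until the server accepts TCP connections
  for _ in $(seq 1 30); do
    docker exec pgvector-demo pg_isready -h 127.0.0.1 -U postgres >/dev/null 2>&1 && break
    sleep 1
  done

  # Feed the SQL file to psql running inside the container
  docker exec -i pgvector-demo psql -U postgres < demo.sql
}

# Only attempt the Docker steps when the CLI is available
if command -v docker >/dev/null 2>&1; then
  run_demo || echo "Could not run the demo; is the Docker daemon running?"
fi
```

The query embedding is closest to the first row, so the final SELECT should return the password question. When you are done, docker rm -f pgvector-demo stops and removes the container.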

The pull command from earlier downloaded an image from Docker Hub. There, you can see all the versions of postgres+pgvector and try out the ones that interest you. Often, though, repositories will have one or more Dockerfiles that can build an image. A Dockerfile is convenient to share because it’s smaller than the image. But there’s another advantage.

Docker images are generally meant to be immutable. If you’d like to change the build, you need to create a new image, which you can build from the Dockerfile. For example, the pgvector repo tells you how to build an image from its Dockerfile.

git clone --branch v0.7.2 https://github.com/pgvector/pgvector.git

cd pgvector

docker build --pull --build-arg PG_MAJOR=16 -t myuser/pgvector .

With the Dockerfile, you can modify the build steps to create an image tailored toward your project’s needs. All of this may sound complicated, but it has some benefits:

Consistency - You and others can run your application the same way. You can generally use the same procedures to get something up and running.

Collaboration - A team can share resources and get set up faster. This lets them focus on their goals faster. Also, issues that arise are less likely to be related to any one person’s individual environment.

Flexibility - You can create your own Dockerfile to adjust the build to your needs. Also, you can use the version of an application most aligned with your requirements.

Separation - You can spin up a separate instance of an application for each project. This avoids conflicts between projects.

Let’s focus on that last one. If you’re working on multiple projects, using separate instances of applications can avoid conflicts. Conflicts between projects can come from their differing requirements or dependencies, and even from the resources they share. In the context of databases, sharing a table between projects could get confusing.

Even if you’re working on one project, you may benefit from having multiple instances. Again, in the context of a database, you may want two databases - one for chatbot content and one for analytics. You can easily create instances of your application from the same Docker image.

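# Instance 1 (-d runs the container in the background)

docker run -d --name my-cool-app-postgres -e POSTGRES_PASSWORD=password pgvector/pgvector:pg16

# Instance 2

docker run -d --name my-amazing-app-postgres -e POSTGRES_PASSWORD=password pgvector/pgvector:pg16

Useful commands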

We’ve already seen some useful docker commands like pull, build, and run. But there are many other useful commands to work with images and containers. Here is a common workflow I’ve used when working with Dockerized applications.

# Get an application image

docker pull {application}

# Check that it is on your system

docker image ls

# Start a container

docker run --name {what-you-want-to-call-this-instance} {docker-image-name}

# Check that the container is running

docker container ls

# Use the terminal in the container

docker container exec -it {container-id} /bin/bash # Start a terminal session inside

docker container exec -it {container-id} ls # Example of running the ls command inside

# Stop the container

docker container stop {container-id}

# Remove containers

## Get a list of all non-running containers

docker ps -a -f "status=exited"

## Remove a specific container that's not running

docker rm {container-id}

# or

## Remove all stopped containers

docker container prune

# Remove images

## Remove a single image

docker image rm {image-id}

## Remove dangling (untagged) images; add -a to remove every image not used by a container

docker image prune

Of course, these commands have extra options, and Docker provides many other commands. These ones will help you get started.

Once you start using Docker, it’s so easy to experiment with different images that the number of images and containers on your system can grow quickly. It’s easy to forget what’s there. Since Docker artifacts contain everything you need for deployment, they can take up a significant amount of space on your machine.
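To see how much space is involved before cleaning anything up, Docker has a built-in disk usage summary. The snippet below is a small sketch that assumes the Docker CLI is installed; the variable name usage_report is only for illustration.

```shell
# Summarize the disk space used by images, containers, volumes, and build cache
if command -v docker >/dev/null 2>&1; then
  usage_report=$(docker system df 2>&1) \
    || usage_report="Docker is installed, but the daemon is not reachable."
else
  usage_report="docker not found on PATH"
fi

echo "$usage_report"
```

The summary typically includes a column for reclaimable space, which gives a rough idea of how much a cleanup could free.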

As a result, it’s important to regularly remove what you don’t need. Adding the “--rm” flag when you run an image will delete the container once it’s stopped.

docker run --rm {image-id}

Docker can be a useful tool for working with and sharing applications. In the case of conversational AI projects, Docker can help team members collaborate and get a dev environment up quickly. It can help you try out tools you are interested in without much risk of “ruining your system” or causing conflicts with other projects. In this post, we’ve mainly looked at how to get existing Docker images and use them on our machine. In the next post, we will look at how you can package your own applications into a Docker image and run them.