Introduction to Docker: part 2

Creating your own Docker images for conversational AI applications

Docker can be a useful tool for working with applications quickly and for collaborating with peers. In the last post, we looked at how to run applications using Docker. When you are ready, though, you can also use Docker to share your conversational AI application with others.

Building a Docker image requires a Dockerfile, which contains a set of instructions to build an application step by step. A Docker image should contain all of the files and dependencies your application needs to run. That means the build starts at the operating system and works up to your application. Let’s think through the steps you might need to build a Python application. Your application will need:

an operating system

an installation of Python

a root directory for the project to live in

an installation of all the application’s dependencies

the application’s actual files

a start up command

As it grows in complexity, your application will require more steps, such as setting up secrets or default users.

Each of the steps in the Dockerfile builds a layer. If we were to build from a Dockerfile with the steps above, there would be 6 layers. During the build process, individual layers are not changed. Instead, a new layer inherits all of the previous layer and makes any necessary adjustments. One benefit of this is that Docker caches each step. When you change one step, Docker can reuse the cached layers that come before it and only rebuilds from the changed step onward. That can save you some time.
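To make the caching idea concrete, here is a minimal Python sketch. It is a simplified model and not Docker's actual cache logic: it assumes each layer's cache key is a hash of the step's text plus the key of the layer before it. The function names (`layer_keys`, `steps_to_rebuild`) are made up for illustration.

```python
import hashlib


def layer_keys(steps):
    """Return one cache key per step; each key depends on all earlier steps."""
    keys = []
    parent = ""
    for step in steps:
        # Chain the previous key into the hash so a change ripples forward.
        parent = hashlib.sha256((parent + "\n" + step).encode("utf-8")).hexdigest()
        keys.append(parent)
    return keys


def steps_to_rebuild(old_steps, new_steps):
    """Count how many of new_steps cannot be served from the old cache."""
    reused = 0
    for old, new in zip(layer_keys(old_steps), layer_keys(new_steps)):
        if old != new:
            break
        reused += 1
    return len(new_steps) - reused


steps = [
    "operating system",
    "install Python",
    "create project directory",
    "install dependencies",
    "copy application files",
    "start-up command",
]

# Editing the fifth step leaves the first four layers reusable.
changed = steps[:4] + ["copy application files (edited)"] + steps[5:]
print(steps_to_rebuild(steps, changed))  # 2
```

In this model, a change near the end of the list is cheap and a change near the start forces everything after it to rebuild, which is why slow, rarely changing steps are usually placed early.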

The end result of building with a Dockerfile is a Docker image. The Docker image can be quite large due to all the files inside. It also may have secrets in it, so you would not necessarily want to share the Docker image itself. Instead, you would usually include the Dockerfile in your code repository. This allows people to make adjustments to the code and/or the Dockerfile to tailor the build for their purposes.

Setting up a demo application

We’ll use a simple FastAPI application to practice working with Docker. The API will just have one endpoint that takes in a request and outputs “Hello World!”. For more info on FastAPI, check out their introductory tutorial.

Add a requirements.txt with the fastapi dependency. Even though this application only has one dependency, your applications will likely be more complicated. Installing dependencies in Docker will be simpler with a requirements file.

fastapi[standard]

Install the dependencies.

pip install -r requirements.txt

Create a simple file called `app.py` with the following code.

from fastapi import FastAPI

app = FastAPI()


# Respond to GET requests at the root path
@app.get("/")
async def root():
    return {"message": "Hello World!"}

That’s it! You can run the application in a terminal window with the following command:

uvicorn app:app --host 0.0.0.0 --port 8000

Open a Python shell (e.g., type “python” or “ipython” in a second terminal window) and make a request to the API.

>>> import requests
>>> resp = requests.get(url='http://localhost:8000')
>>> resp.content
b'{"message":"Hello World!"}'

Building a Dockerfile

Let’s add a Dockerfile to our repo. The Dockerfile does not have any special file extension like “.txt”. It’s just “Dockerfile”. Inside, we’ll write the instructions to build our application using a process very similar to the 6 steps outlined earlier.

The first part to add is the operating system. You can use any operating system that you’d like. It does not have to match your current machine’s system. Even with the operating system, though, there are a lot of steps to go through to install Python. That could be annoying to keep track of and clutters up the Dockerfile. Python has pre-configured Docker images with various versions of an operating system and Python together. How convenient!

Each Python version has a corresponding Docker image. However, each version has different subtypes with different operating system builds.

alpine - Uses Alpine Linux and is very small and security focused

bookworm - Uses Debian 12 Linux with fairly up-to-date files

bullseye - Uses Debian 11, which is older but perhaps more stable

slim - Uses a very pared-down version of Debian to reduce file size

Let’s use the alpine version since it’s small and fast. The following line adds both the Alpine Linux operating system and Python 3.11 to the image. Steps 1 and 2 down in one line!

FROM python:3.11-alpine

The practice project lives in a particular folder on your machine. All the project’s files are there. In a similar way, you need to set the folder where the project’s files will live in the Docker image. The line below creates that folder and sets it as the working directory. You can use a different name than “app”.

WORKDIR /app

With the project folder set up, Docker needs to install the dependencies. You could add “pip install” commands to the Dockerfile to install each dependency one by one. As mentioned earlier, it’s more convenient to use the requirements.txt that the project folder likely already has. In the Dockerfile, add two new commands: 1) copy the requirements.txt over and 2) run “pip install”.

COPY requirements.txt /app/requirements.txt
RUN pip install -r requirements.txt

With the dependencies installed, tell Docker to copy over the rest of the files into the project folder.

COPY . /app

To ensure the application is accessible, you need Docker to expose a port. If you run the Docker container in the cloud, you may need to check with the cloud provider to understand its port options and requirements. For example, Google Cloud Run defaults to 8080. Usually you can adjust the port number to your preference. Let’s just use port 8000.

EXPOSE 8000

Now tell Docker how to start the application. When the container runs, Docker will start your application using this command.

CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8000"]

The complete Dockerfile looks like this:

FROM python:3.11-alpine

WORKDIR /app

COPY requirements.txt /app/requirements.txt

RUN pip install -r requirements.txt

COPY . /app

EXPOSE 8000

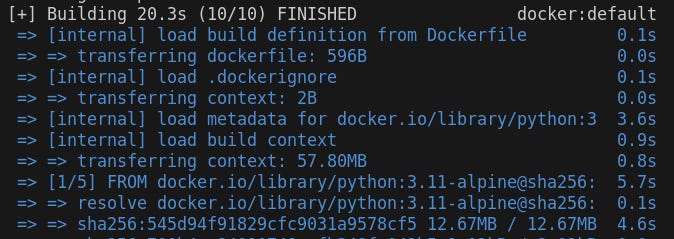

CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8000"]Now you can use Docker to run your application. Enter the following command to build the Docker image:

docker build -t simple_fastapi_app:latest .

Run the application to make sure it works.

docker run simple_fastapi_app:latest

If you try to make a request to the API again, you will get an error message.

>>> import requests
>>> resp = requests.get(url='http://localhost:8000')
ConnectionError: HTTPConnectionPool(host='localhost', port=8000): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f01e15d7510>: Failed to establish a new connection: [Errno 111] Connection refused'))

That’s unusual, right? The Docker container is running on port 8000. The same request got a response before, but it didn’t connect to the API this time.

The application is running in a Docker container. That environment is separate from your local machine, so the application is not running on your local machine’s port 8000. “EXPOSE 8000” referred to port 8000 inside the Docker container (on its Alpine Linux OS), not on your machine.
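One way to see this from the host side is to check whether anything accepts TCP connections on a given port. The helper below is a small sketch using only Python's standard library; the name `port_is_open` is made up for illustration. With the container started as above, it would be expected to report that nothing is reachable on your machine's port 8000.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        # create_connection raises OSError (e.g. connection refused) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Check the host machine's port 8000, the one the request was sent to
print(port_is_open("localhost", 8000))
```

This only tells you whether the port is reachable from where the script runs. It says nothing about what is happening inside the container.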

In order to connect your machine to the Docker container, you need to run Docker with another setting to map the ports (“-p”). The number on the left refers to the host machine’s port. The number on the right refers to the Docker container’s port.

docker run -p 8000:8000 simple_fastapi_app:latest

You could even map different port numbers together. For example, I often run different Postgres databases on my computer, all of which default to port 5432. To keep the Docker containers separate from my local Postgres, I map the Docker containers using “-p 5431:5432” and adjust the settings in my app to point at port 5431. Generally, though, it’s simpler to map ports to the same number, like we did for the simple API.
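If the left/right order is hard to remember, this small Python sketch spells it out. It is illustrative only: `parse_port_mapping` is a made-up helper, and it handles just the plain “HOST:CONTAINER” form used in this post, not the other forms that “-p” accepts.

```python
def parse_port_mapping(mapping):
    """Split a 'HOST:CONTAINER' string into (host_port, container_port)."""
    host, sep, container = mapping.partition(":")
    if not sep or not host.isdigit() or not container.isdigit():
        raise ValueError(f"expected 'HOST:CONTAINER', got {mapping!r}")
    return int(host), int(container)


# Left number: the port on your machine. Right number: the port in the container.
host_port, container_port = parse_port_mapping("5431:5432")
print(f"connect to localhost:{host_port}; the container listens on {container_port}")
```

In the Postgres example, the app on your machine would connect to port 5431 while the database inside the container keeps listening on its default 5432.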

Now, try to make a request to the system again. You should get the same result as before.

>>> import requests
>>> resp = requests.get(url='http://localhost:8000')
>>> resp.content
b'{"message":"Hello World!"}'

Dockerfiles provide a powerful way for you to share and work with your applications. Team members or other interested people can use the Dockerfile to build the application without necessarily learning how all of the individual steps work. They can also adjust the build easily if they need to. Overall, it lets them quickly get to work or start using the application. In terms of deployment, Dockerfiles allow you to share your application with cloud services, usually via a Docker image. Most cloud services can run a Dockerized version of your application. One benefit is that you can pick the cloud service that meets your current needs without necessarily locking yourself in to that provider.

This simple application and Dockerfile are a great start, but most applications will be more complex than this. Indeed, it can be hard to get a Dockerfile to build your application correctly. One area that you will need to handle in most applications is the use of secrets. Up next, we’ll look more into how to incorporate secret values into the build process.